The Intersection of High-Performance Computing and AI: What Hiring Teams Should Know

03 Dec, 2025 · 5 min read

Your machine learning teams are stuck: their models are too large, training runs take too long, and your current cloud setup cannot deliver the deep learning scaling that advanced R&D requires. The traditional distinction between data science and infrastructure engineering has collapsed, and without experts in HPC and AI convergence, computational bottlenecks will stall innovation. Hiring managers must understand that AI infrastructure is now synonymous with high-performance computing.

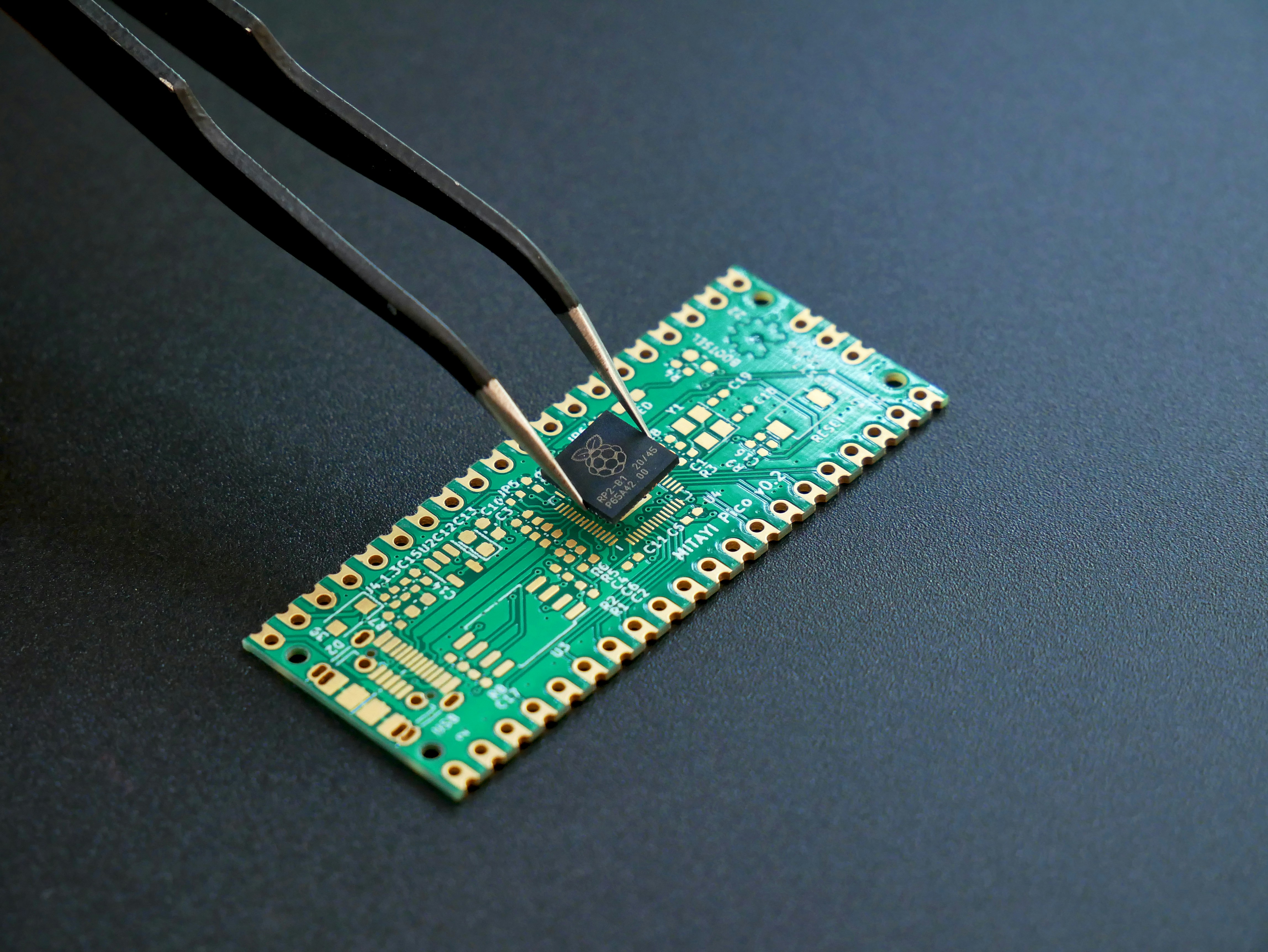

The transition from single-server model experimentation to massive compute clusters requires engineering talent fluent in parallel computing and specialized hardware, such as the NVIDIA DGX system. This guide clarifies the specific intersection points of HPC and AI and outlines the critical skillsets required to move your deep learning projects from proof-of-concept to production at scale.

Key Takeaways:

- HPC and AI intersect primarily in their shared need for high-throughput GPU acceleration and specialized, low-latency network interconnects for data transfer.

- Deep learning scaling relies heavily on data parallelism and model parallelism, foundational techniques borrowed directly from parallel computing.

- Hiring teams must look for expertise in managing AI infrastructure, specifically the configuration and optimization of compute clusters utilizing hardware like the NVIDIA DGX.

- The core challenge in training large AI models is managing synchronization and communication across thousands of GPUs, which makes HPC networking skills essential.

The Computational Mechanism

High-Performance Computing (HPC) provides the essential framework for meeting the extreme computational demands of modern machine learning.

How does HPC accelerate AI training?

HPC accelerates AI training through parallel computing: computational tasks are divided and executed simultaneously across many processors. This depends on dense GPU hardware, such as NVIDIA DGX systems, and a high-bandwidth, low-latency network fabric (e.g., InfiniBand) that sharply reduces the time spent synchronizing the data and gradient updates exchanged between devices as deep learning workloads scale.
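To make the synchronization step concrete, here is a minimal, framework-free sketch of data parallelism, assuming a toy linear model `y_hat = w * x` trained with mean-squared-error loss. Each simulated worker computes a gradient on its own shard of the batch, the gradients are averaged (the job an all-reduce does on a real cluster), and every replica applies the same update. The function names are hypothetical, and the "workers" run one after another in a single process; frameworks such as PyTorch's DistributedDataParallel perform the equivalent exchange across GPUs over the network.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair


def local_gradient(w: float, shard: List[Sample]) -> float:
    """d/dw of mean squared error for the toy model y_hat = w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for an all-reduce: every worker ends up with the average gradient."""
    return sum(values) / len(values)


def data_parallel_step(w: float, batch: List[Sample], num_workers: int, lr: float) -> float:
    # This sketch assumes the global batch divides evenly across workers.
    if len(batch) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    # Each worker computes its gradient independently (in parallel on real hardware).
    grads = [local_gradient(w, shard) for shard in shards]
    # Synchronize: average the gradients so every replica applies the identical update.
    g = all_reduce_mean(grads)
    return w - lr * g


if __name__ == "__main__":
    # Data generated from y = 3x, so training should drive w toward 3.0.
    batch = [(float(x), 3.0 * x) for x in range(1, 9)]
    w = 0.0
    for _ in range(50):
        w = data_parallel_step(w, batch, num_workers=4, lr=0.01)
    print(f"learned w = {w:.4f}")
```

With equal-sized shards, the averaged shard gradients equal the full-batch gradient, which is why data parallelism reproduces single-device training while the synchronization step becomes the cost that HPC networking has to absorb.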

Why do ML teams need HPC engineers?

ML teams need HPC engineers because successful deep learning scaling requires expertise in distributed system architecture, which data scientists often lack. HPC engineers specialize in optimizing AI infrastructure to handle model parallelism (splitting the model across multiple devices) and data loading pipelines, ensuring that the expensive compute clusters are utilized at maximum efficiency, minimizing idle time and cost.
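Model parallelism is easiest to picture with a toy example. The sketch below assumes a model built from simple elementwise layers and uses hypothetical helper names: it assigns contiguous blocks of layers to devices and passes activations from one stage to the next. Nothing here touches a real GPU; the comment marks where a real framework would move activations between devices, which is the communication overhead HPC engineers work to minimize.

```python
from typing import Callable, List

Layer = Callable[[List[float]], List[float]]


def make_affine_layer(scale: float, shift: float) -> Layer:
    """Toy layer: elementwise y = scale * x + shift (stands in for a real network layer)."""
    return lambda xs: [scale * x + shift for x in xs]


def partition_layers(layers: List[Layer], num_devices: int) -> List[List[Layer]]:
    """Assign contiguous blocks of layers to devices, spreading any remainder over the first devices."""
    base, extra = divmod(len(layers), num_devices)
    stages: List[List[Layer]] = []
    start = 0
    for d in range(num_devices):
        size = base + (1 if d < extra else 0)
        stages.append(layers[start:start + size])
        start += size
    return stages


def model_parallel_forward(stages: List[List[Layer]], inputs: List[float]) -> List[float]:
    activations = inputs
    for stage in stages:
        # On real hardware, `activations` would be copied to this stage's device here;
        # that transfer is the communication cost model parallelism introduces.
        for layer in stage:
            activations = layer(activations)
    return activations


if __name__ == "__main__":
    layers = [make_affine_layer(2.0, 1.0) for _ in range(5)]
    stages = partition_layers(layers, num_devices=2)
    print([len(s) for s in stages])                  # [3, 2]
    print(model_parallel_forward(stages, [0.0, 1.0]))  # [31.0, 63.0]
```

The partitioned forward pass produces the same result as running all five layers on one device; the engineering work lies in balancing stages and hiding the activation transfers between them.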

Is AI replacing HPC workloads?

No, AI is not replacing HPC workloads; rather, it is becoming one of the largest consumers of HPC resources and expertise. The relationship is complementary: AI drives demand for more powerful compute clusters and specialized hardware, while traditional HPC experts provide the parallel computing knowledge needed to manage and optimize these systems for modern AI training.

How to Recruit HPC Engineers for AI Infrastructure

To successfully recruit engineers who excel at the intersection of HPC and AI, prioritize candidates with specific experience in distributed AI infrastructure deployment.

Define Model Parallelism Experience - Screen candidates on their practical experience implementing model parallelism or pipeline parallelism in frameworks like PyTorch or TensorFlow, and confirm they understand the network and memory trade-offs those techniques involve.

Verify GPU Acceleration Expertise - Ask for direct experience managing and optimizing resource allocation within large compute clusters, particularly those utilizing specialized hardware like the NVIDIA DGX for maximum GPU acceleration.

Audit Parallel Computing Skills - Check for deep knowledge of communication libraries such as MPI or NCCL to validate the candidate's understanding of how gradients and other data are synchronized across processes during large-scale AI training.
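When auditing collective-communication skills, it helps to know what an all-reduce actually does. Below is a single-process simulation of ring all-reduce, one of the algorithms NCCL can select for all-reduce (it also has tree-based algorithms). The function name and data layout are our own illustration, not an NCCL or MPI API: each worker's buffer is split into n chunks, a reduce-scatter phase sums chunks as they travel around the ring, and an all-gather phase circulates the finished sums until every worker holds the full result.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce over a ring of n workers; returns each worker's final buffer."""
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers) or length % n:
        raise ValueError("buffers must have equal length divisible by the number of workers")
    size = length // n
    # chunks[w][c] is worker w's current copy of chunk c.
    chunks = [[list(b[c * size:(c + 1) * size]) for c in range(n)] for b in buffers]

    # Phase 1: reduce-scatter. After n-1 steps, worker w holds the full sum of chunk (w + 1) % n.
    for step in range(n - 1):
        # Snapshot all outgoing messages first so every send in a step happens "simultaneously".
        msgs = [(w, (w - step) % n, list(chunks[w][(w - step) % n])) for w in range(n)]
        for w, c, data in msgs:
            dst = (w + 1) % n
            chunks[dst][c] = [a + b for a, b in zip(chunks[dst][c], data)]

    # Phase 2: all-gather. Pass the fully reduced chunks around the ring, overwriting stale copies.
    for step in range(n - 1):
        msgs = [(w, (w + 1 - step) % n, list(chunks[w][(w + 1 - step) % n])) for w in range(n)]
        for w, c, data in msgs:
            chunks[(w + 1) % n][c] = data

    # Flatten each worker's chunks back into a single buffer.
    return [[x for chunk in worker for x in chunk] for worker in chunks]


if __name__ == "__main__":
    grads = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]]
    print(ring_all_reduce(grads))  # every worker ends with [111.0, 222.0, 333.0]
```

Each worker sends 2(n-1) chunks, each 1/n of the buffer, so per-worker traffic stays roughly constant as workers are added; the number of sequential steps still grows with n, which is one reason interconnect latency matters at large scale.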

Secure Your HPC and AI Infrastructure Experts

Contact Acceler8 Talent today to immediately secure the specialized HPC and AI engineering talent required to achieve breakthrough deep learning scaling and optimize your critical AI training efficiency.

Author Bio

The Acceler8 Talent Team provides specialist technical recruitment strategy at Acceler8 Talent. They advise leading tech companies on hiring specialized engineers who solve critical performance bottlenecks in areas such as HPC, AI infrastructure, and parallel computing.